Participants

Prior to data collection, informed consent was obtained from the participants according to the guidelines set and approved by the Ethical Research Committee of the National University of Computer and Emerging Sciences, Islamabad, Pakistan. After data collection, data were anonymized and any identifying information removed according to guidelines provided by the Ethics Review Committee.

A total of 24 participants took part in this study: 12 smartphone users were chosen to act as smartphone owners and 12 were chosen to act as their close adversaries. Participants were required to own an Android smartphone with API level 23 or higher and to have basic knowledge of smartphone usage. Each participant filled out a consent form, which provided details of the data collection. Basic demographic information about the participants (name, gender, age, occupation) was also recorded. Eight users were male and four were female. Users were aged 18 to 28 years, with an average age of 24 years. Fifteen users were students (7 engineering students, 5 computer science students, 2 psychology students, and 1 MBA graduate), 3 were teachers, and 2 housewives also participated in the study.

To gain access to a smartphone, one close adversary per user was required to act as an attacker. Seven of the adversaries were close friends of the user and five were siblings. Four users also acted as adversaries to each other; they were close friends or roommates.

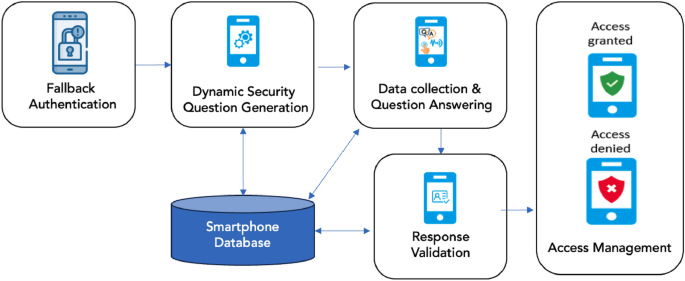

Research design

This study has two parts. The first part collected data from the user, and the second part collected data from the adversary. Two data collection applications were developed: one for smartphone usage data and one for inertial sensor data. Users had to answer security questions every day. The number of questions depended on the data available from the past 24 hours, with a maximum of 10 questions per category. Data from the adversary were collected once a week, with the adversary using the primary user’s phone to answer the security questions. Because it would be difficult for every adversary to access the user’s phone every day, adversaries only needed to answer the security questions once a week.

Descriptive statistics of collected data

Data were collected for 28 days. Overall, 6625 questions were asked of users \((n = 12)\) and 996 questions were asked of adversaries \((n = 12)\). The greatest number of questions came from the application usage category and the fewest came from the SMS category. Table 3 shows the number of questions asked of users and adversaries by category.

Dynamic secret question accuracy

Users returned correct answers ranging from 93.70% to 100% (average 95.76%), while adversaries returned correct answers ranging from 12.36% to 97.56% (average 28.51%). Among user responses, the highest accuracy was observed for the battery charging event category (100%), while the lowest accuracy was observed for the SMS category (93.70%). Similarly, among the adversary responses, the highest accuracy was observed for the battery charging event category (97.56%), while the lowest accuracy was observed for the SMS category (12.36%). The ability to accurately recall charging events can be attributed to the fact that almost all users charged their smartphones using a single type of charger: an AC adapter (direct charging).

To calculate question accuracy, a confusion matrix is created using the percentages of correct and incorrect answers. A true positive (TP) is a correct answer provided by the user, and a false negative (FN) is an incorrect answer provided by the user. A false positive (FP) is a correct answer provided by the adversary, and a true negative (TN) is an incorrect answer provided by the adversary. Specifically, the accuracy (A) is given by:

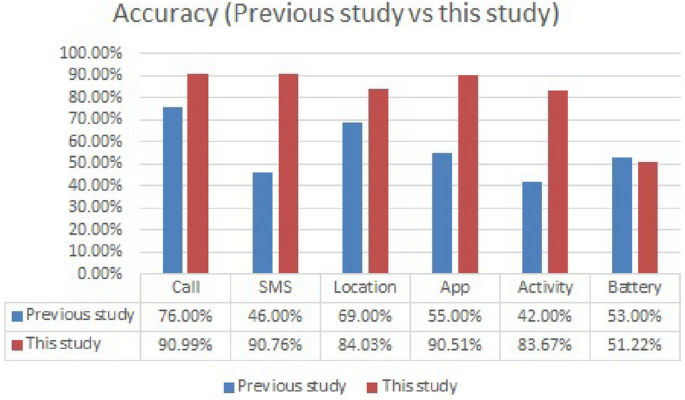

\(Accuracy\ (A) = \frac{TP+TN}{TP+TN+FP+FN}\). The confusion matrix is shown in Table 4, where the values for true positives, true negatives, false positives, and false negatives are given as percentages. Accuracy is calculated for each category. The battery charging event category performed worst, with \(51.22\%\) accuracy, and the call category performed best, with \(90.99\%\) accuracy. Three categories (calls, SMS, and application usage) reach an accuracy of about \(90\%\), while two further categories reach about \(83\%\). According to these results, a combination of the top three categories (calls, SMS, and application usage) is the best for user authentication. The average accuracy for these three categories is \(90.75\%\).
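The accuracy formula can be checked against the reported per-category figures. The following is a minimal sketch, assuming that each confusion matrix is expressed in percentages so that the user entries (TP, FN) and the adversary entries (FP, TN) each sum to 100. The function name is illustrative and does not come from the study.

```python
def category_accuracy(user_correct_pct: float, adversary_correct_pct: float) -> float:
    """Accuracy (%) of one question category from percentage-based confusion entries.

    Assumption: user answers and adversary answers are each normalised to 100%.
    """
    tp = user_correct_pct               # correct answers given by the user
    fn = 100.0 - user_correct_pct       # incorrect answers given by the user
    fp = adversary_correct_pct          # correct answers given by the adversary
    tn = 100.0 - adversary_correct_pct  # incorrect answers given by the adversary
    return 100.0 * (tp + tn) / (tp + tn + fp + fn)


# Battery charging events: users were 100% correct, adversaries 97.56% correct.
print(round(category_accuracy(100.0, 97.56), 2))
```

With the battery charging percentages reported above, this agrees with the stated \(51.22\%\) to two decimal places: a category that the adversary answers almost as well as the user scores close to 50%.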

We compare our findings with a previous study (ref. 2). This comparison shows that our study significantly improves the accuracy of all categories except the battery category. In this study, the battery category performed the worst because the question only asked for the type of charger, making it very easy for an attacker to guess.

All questions are multiple-choice questions, so an answer can be guessed at random. For a single question, the probability of a correct random guess is \(\frac{1}{4}\) (0.25, or 25%). With a combination of seven questions, this probability decreases to \((\frac{1}{4})^7\) (0.000061, or 0.006%). For comparison, the guessability of a 4-digit PIN is \(\frac{1}{10000}\) (0.0001, or 0.01%) (ref. 17).
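The guessability arithmetic can be reproduced with exact fractions. This is a minimal sketch, assuming four equally likely options per question and independent random guesses across questions. The function names are illustrative.

```python
from fractions import Fraction


def guess_probability(num_questions: int, options: int = 4) -> Fraction:
    """Probability of guessing every one of num_questions answers at random."""
    return Fraction(1, options) ** num_questions


def min_questions(target: Fraction, options: int = 4) -> int:
    """Smallest number of questions whose guess probability is at most target."""
    n = 1
    while guess_probability(n, options) > target:
        n += 1
    return n


pin_guess = Fraction(1, 10_000)       # guessability of a 4-digit PIN
print(float(guess_probability(7)))    # probability for seven questions
print(min_questions(pin_guess))       # questions needed to match the PIN
```

Under these assumptions, six questions give \(\frac{1}{4096}\), which is still easier to guess than a 4-digit PIN, while seven questions give \(\frac{1}{16384}\), which is harder.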

Therefore, 10 questions can be asked to authenticate a user. Each correct answer earns 10 points and each incorrect answer deducts 30 points. If the final score is positive, the user gains access; otherwise, access is denied. Under this point-based method, the user has to provide at least eight correct answers, so a maximum of 2 incorrect answers out of 10 is accepted. If the user provides three or more wrong answers, the final score is negative (e.g. \(7 \times 10 = 70\), \(3 \times (-30) = -90\), \(70 - 90 = -20\)) and access is rejected. The experiments show that the most preferred categories are calls, SMS, and application usage, with an overall accuracy above \(90\%\), which is more accurate than physical activity or location (\(83\%\)). The charging event category is not considered because it has the worst performance and its answers are very easy to guess.
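The point-based rule is simple enough to write down directly. The sketch below assumes exactly the values described above (10 points per correct answer, 30 points deducted per incorrect answer, access only for a strictly positive score). The constant and function names are illustrative.

```python
CORRECT_POINTS = 10      # points earned per correct answer
INCORRECT_PENALTY = 30   # points deducted per incorrect answer


def final_score(correct: int, total: int = 10) -> int:
    """Score after `total` questions, of which `correct` were answered correctly."""
    if not 0 <= correct <= total:
        raise ValueError("correct must be between 0 and total")
    incorrect = total - correct
    return CORRECT_POINTS * correct - INCORRECT_PENALTY * incorrect


def access_granted(correct: int, total: int = 10) -> bool:
    """Access is granted only when the final score is strictly positive."""
    return final_score(correct, total) > 0


for correct in (10, 8, 7):
    print(correct, final_score(correct), access_granted(correct))
```

Eight correct answers give a score of 20 and pass, while seven give -20 and fail, which matches the threshold of at least eight correct answers out of ten.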

Comparison of our proposed study with ref. 2.

Behavioral biometrics

For behavioral biometrics, data were collected only while the user or adversary answered the security questions, sampled with a 0.05 ms delay. Four classifiers were considered: Naive Bayes (NB), K-Nearest Neighbors (KNN), Multilayer Perceptron (MLP), and Random Forest (RF). All of these classifiers were run in Weka (ref. 33) with default parameters. The frequency of data collection is critical to the results. When data are collected very infrequently, all classifiers perform worst; when data are collected very frequently, garbage data are generated and the classifiers tend to overfit (refs. 17, 18). This study found that a delay of 0.05 milliseconds gave the best results.

In this study, a true positive (TP) is an instance that actually comes from the user and is classified as the user. A true negative (TN) is an instance that actually comes from an adversary and is classified as an adversary. A false positive (FP) is an instance that is classified as the user when it actually comes from an adversary. Finally, a false negative (FN) is an instance that actually comes from the user but is classified as an adversary. Precision is calculated as: \(Precision = \frac{TP}{TP+FP}\)
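The ratio \(\frac{TP}{TP+FP}\) is the quantity usually called precision. The sketch below counts the four outcomes from labelled instances and derives precision, together with accuracy as \(\frac{TP+TN}{TP+TN+FP+FN}\). The labels, function names, and toy data are made up for illustration and are not taken from the study's dataset or from Weka's output.

```python
from collections import Counter


def confusion_counts(actual, predicted, positive="user"):
    """Count TP, FP, FN, TN, treating `positive` as the genuine-user class."""
    counts = Counter()
    for a, p in zip(actual, predicted):
        if p == positive:
            counts["TP" if a == positive else "FP"] += 1
        else:
            counts["FN" if a == positive else "TN"] += 1
    return counts


def precision(counts) -> float:
    """TP / (TP + FP); returns 0.0 when nothing was classified as the user."""
    denom = counts["TP"] + counts["FP"]
    return counts["TP"] / denom if denom else 0.0


def accuracy(counts) -> float:
    """(TP + TN) / all instances; returns 0.0 for an empty input."""
    total = sum(counts.values())
    return (counts["TP"] + counts["TN"]) / total if total else 0.0


# Toy example (hypothetical labels, not study data).
actual = ["user", "user", "user", "adversary", "adversary", "user"]
predicted = ["user", "user", "adversary", "user", "adversary", "user"]
counts = confusion_counts(actual, predicted)
print(dict(counts), precision(counts), accuracy(counts))
```

Precision penalises only adversary instances accepted as the user, whereas accuracy also penalises genuine-user instances that are rejected, so the two measures can differ for the same classifier.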

The results obtained using the KNN classifier are shown in Table 6. Precision ranges from 0.867 to 0.973 and accuracy ranges from \(91.55\%\) to \(98.25\%\). The results obtained using the NB classifier are shown in Table 5. Precision ranges from 0.837 to 0.904 and accuracy ranges from \(86.45\%\) to \(93.85\%\). The MLP results are shown in Table 7. Precision ranges from 0.865 to 0.980 and accuracy ranges from \(92.15\%\) to \(98.85\%\). The results of the RF technique are shown in Table 8. Precision ranges from 0.862 to 0.982 and accuracy ranges from \(91.20\%\) to \(99.00\%\).

The averages over all users for each classifier are shown in Table 9. NB has the lowest precision (0.868) and accuracy (\(91.20\%\)), while MLP has the best precision (0.955) and accuracy (\(97.32\%\)). Note, however, that all classifiers provide good results. MLP and RF are very close in terms of precision and accuracy. NB provides a slightly lower precision of 0.868 and accuracy of \(91.20\%\), but it takes much less time to perform the task than all other techniques. The trade-off between accuracy and processing time is important: higher accuracy is better, but the time the process takes also matters for user experience. Our results are compared with ref. 17 in Table 10, which shows that this study significantly improves the results for all classifiers.

Our research results show that classifiers perform well when distinguishing between real users and adversaries, but it also depends on the frequency of data collection and the data available for training. 28 days of data were used for the users and 4 days of data were used for the attackers.

Discussion

Our findings showed that questions from the battery charging event category performed the worst, with \(51.22\%\) accuracy. These questions were very easy to guess, which raised the adversaries' rate of correct answers and lowered the accuracy of the category. Questions from the call category were the most effective, with \(90.99\%\) accuracy. All questions were multiple-choice questions with four options. To reduce the random guessability of the answers, at least 7 questions are needed to bring the guessability close to that of a 4-digit PIN. Ten questions are recommended, and users must give at least eight correct answers to authenticate. In this study, we also compared the performance of the NB, KNN, MLP, and RF classifiers. NB gave the lowest accuracy (\(91.20\%\)) and MLP gave the highest (\(97.32\%\)). However, processing time also matters for user convenience, and NB has a much lower processing time than all other classifiers.

Research limitations

Our experiment was limited to a small number of participants due to time constraints. However, for smartphone authentication systems designed for large-scale deployment, it is important to include a larger and more diverse pool of participants from different countries to ensure the effectiveness of the proposed approach. Because our solution is based on user responses, it is inherently flexible and can be tested repeatedly with different participant groups.