Like clockwork, last month marked the beginning of fall. It's the season of new colors, cozy vibes, and all-new iPhones. Since launching the iPhone in 2007, Apple has released a refreshed model every year, often in September, and last month it released the iPhone 16 and iPhone 16 Pro, the 18th generation of the Cupertino-based company's best-selling product. In fact, as reported by Forbes, the iPhone is the world's best-selling smartphone.

Apple's success with the iPhone is partly due to the company's ability to consistently evolve its products through design, software, and hardware updates. One of the latest is a reimagined version of Photographic Styles, introduced with the iPhone 16 series. It's clear that Apple is committed to iPhone photography, but what exactly are Photographic Styles? Why is Apple so excited about them? And… aren't they just filters?

The short answer to the last question is "no." The longer answer is that until now, features like Photographic Styles were not possible on mobile phones, because the technology required for such complex computational models was not ready. Now that it is, we asked Pamela Chen, Apple's chief aesthetic scientist for camera and photography, to explain the difference between filters and Photographic Styles. She pointed to three key differences. First, Photographic Styles are context-aware: the feature is powered by AI trained to understand contextual information, such as the difference between skin tones and the sky, so it can apply effects to these individual elements of a photo and adjust to the scene. "This is very different from a filter, which is essentially semantically agnostic," Chen said, noting that a filter is applied to an entire image without regard for nuance. Second, users can "see the style applied in real time" before taking a photo. Third, they can "adjust intensity" on screen, Chen said, applying changes to their photos in a way that is completely reversible and non-destructive.

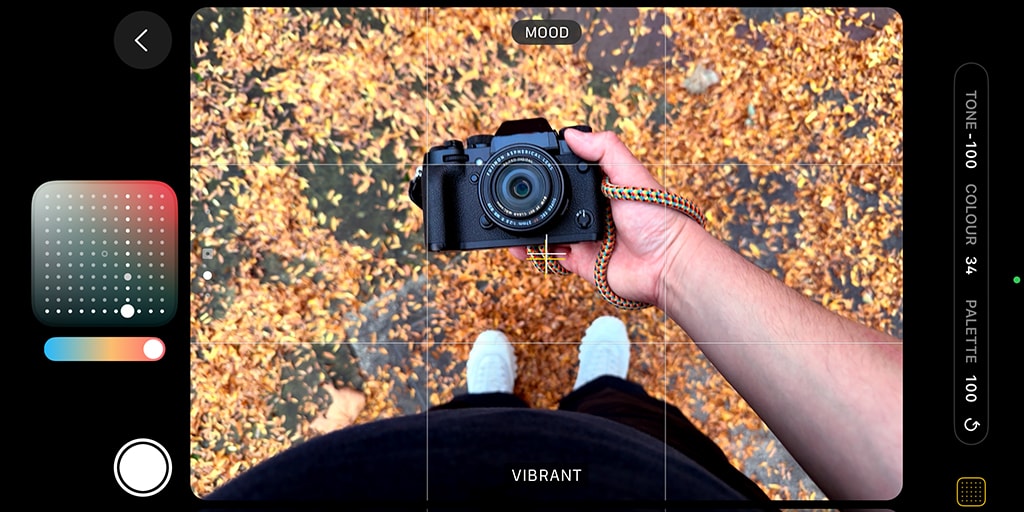

We've been using the iPhone 16 Pro Max for the past few weeks to try out the new camera features. There are 15 Photographic Styles to choose from, ranging from the delicate Cool Rose to the deep contrast of Stark B&W. The user interface is well designed, with simple swipes to move between styles, and users can adjust both tone and color for each one. A separate slider lets users dial the overall intensity of a style up or down. All in all, Apple has made what is undoubtedly an incredibly complex machine under the hood seem simple and easy to use. As Chen put it, "As part of my job at Apple, I delve into the history of photography. It's important to do that."

Apple has also tapped various artists to highlight the new camera features in the iPhone 16 series. Among them is fashion photographer Sarah Silver, who showcased Ethereal, one of the 15 Photographic Styles, during a recent shoot in New York and said she "brought out the fun" on set.

This year's iPhone is not radically different from last year's, and it's no exaggeration to say that the range of changes between models has narrowed in recent years. Updates are now more iterative and focused on specific areas. That said, since the introduction of the first iPhone and its modest 2-megapixel camera (which, by the way, can sell for six figures if you have a sealed one tucked away somewhere), we have come a long way. We've only just scratched the surface of this new era of AI-driven photography, and Apple is leading the way in mobile photography.

As smartphones continue to evolve, we asked Pamela Chen what she thinks the future holds for traditional cameras. She recalled something someone had once told her: "The best camera is the one you have."