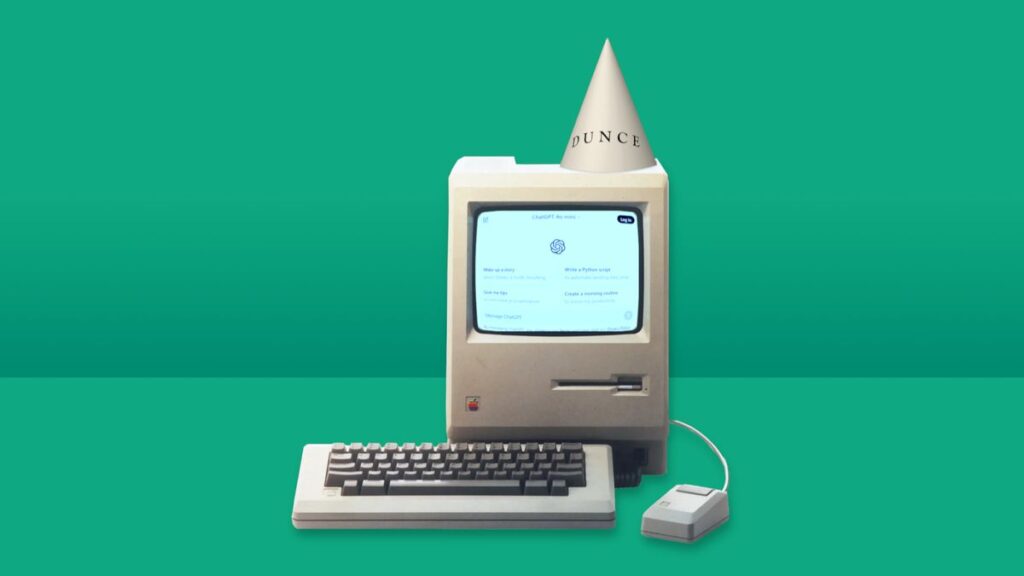

Is ChatGPT getting smarter, or is it trying to look smarter? According to Apple, it’s the latter.

Apple’s team of AI researchers published a paper this weekend claiming that most of the leading large language models, no matter how smart they seem, are not actually capable of advanced reasoning.

Large language models (LLMs) like ChatGPT seem to become more advanced and “intelligent” every year. Under the hood, though, their logical reasoning has not improved much. According to Apple’s research, current LLM capabilities “may be more similar to sophisticated pattern matching than true logical reasoning.”

What does this research mean for the reality of today’s top AI models? It may be time to focus on creating safer AI models before trying to build smarter ones.

Apple AI researchers found LLMs struggle with elementary school math

Apple’s team of AI researchers has revealed results from GSM-Symbolic, a new benchmark test that poses entirely new challenges for large language models.

The test revealed that today’s top AI models, despite how intelligent they appear, have limited reasoning capabilities.

In fact, the AI models in the study struggled with basic elementary school math problems on the GSM-Symbolic test. The more complex the questions, the worse the models performed.

In their paper, the researchers wrote that adding information that seems relevant to a problem’s reasoning but is ultimately unimportant caused significant performance drops of up to 65% across all state-of-the-art models.

“Importantly, we demonstrated that LLMs struggle even when provided with multiple examples of the same question or examples containing similar irrelevant information.”
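To make the idea concrete, here is a minimal Python sketch of how a templated math question with an optional irrelevant clause could be generated. It is an illustration only, not the researchers' code: the template wording, the name list, and the `make_variant` helper are all assumptions made up for this example.

```python
import random

# Hypothetical template: names and numbers are placeholders, so many
# surface variants of the same underlying problem can be produced.
TEMPLATE = (
    "{name} picks {a} apples on Friday and {b} apples on Saturday. "
    "{distractor}How many apples does {name} have?"
)

# Hypothetical distractor: sounds relevant, but does not change the answer.
DISTRACTOR = "{k} of the apples are a bit smaller than average. "


def make_variant(rng, with_distractor=False):
    """Return (question, correct_answer) for one random variant."""
    name = rng.choice(["Ava", "Liam", "Noor", "Kenji"])
    a = rng.randint(10, 60)
    b = rng.randint(10, 60)
    distractor = ""
    if with_distractor:
        k = rng.randint(1, 9)
        distractor = DISTRACTOR.format(k=k)
    question = TEMPLATE.format(name=name, a=a, b=b, distractor=distractor)
    # The correct answer depends only on a and b, never on the distractor.
    return question, a + b


if __name__ == "__main__":
    plain, answer = make_variant(random.Random(7))
    noisy, same_answer = make_variant(random.Random(7), with_distractor=True)
    print(plain)
    print(noisy)
    print(answer == same_answer)
```

A system that truly reasons should give the same answer to both versions, since the extra sentence has no bearing on the total. A model that leans on surface patterns might subtract the smaller apples instead.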

This means that today’s leading AI models are easily confused by logic-based questions, such as math problems. They rely on reproducing the patterns of math problems found in their training data, but they struggle to actually do math the way a human can. This suggests that large language models only look smart when in reality they are just very good at acting smart.

This echoes comments from OpenAI CEO Sam Altman, who has claimed that AI is actually ‘incredibly stupid’ in its current state. OpenAI is the company that developed ChatGPT, and Altman has been ambitious in his pursuit of artificial general intelligence, which would be capable of true logical reasoning.

Apple’s researchers seem to agree. “We believe further research is essential to develop AI models capable of formal reasoning that go beyond pattern recognition and enable more robust and generalizable problem-solving skills,” they conclude.

AI may not be smart yet, but it can still be dangerous

If the research published by Apple’s AI team is accurate, today’s leading large language models would struggle to get through an episode of Are You Smarter Than a 5th Grader? However, that doesn’t mean AI can’t still be a powerful tool, one that can be both incredibly helpful and harmful. In fact, Apple’s research reveals a core strength and potential danger of AI: its ability to imitate.

LLMs like ChatGPT may appear to be able to reason like humans, but as this study points out, that’s just AI copying human language and patterns. Although that may not be as sophisticated as actual logical reasoning, AI has become very good at imitation. Unfortunately, malicious actors have been quick to take advantage of every advancement.

For example, this weekend tech YouTuber Marques Brownlee revealed in a post on X that a company had used AI to recreate his voice in an advertisement for its product, even though Brownlee is not affiliated with the company. The AI-generated decoy bears a striking resemblance to Brownlee’s real voice, and the ad was clearly intended to deceive viewers into believing that Brownlee endorsed the product.

It’s happening. There are actually companies out there that just use AI-generated rips of my voice to promote their products. And there are no consequences other than being known as a shitty shady company willing to stoop so low to sell a product pic.twitter.com/Y12XGKNqFO (October 14, 2024)

Unfortunately, incidents like this are becoming more and more common, from the fake presidential endorsement attributed to Taylor Swift to Scarlett Johansson’s claim that OpenAI copied her voice without her permission.

While the average user may not think that these controversies affect them, they are arguably the most important aspect of the AI industry. It’s great that basic tools like ChatGPT and Gemini can help so many people.

However, the ways AI can also be abused for deepfakes, deception, and fraud pose significant risks to the safety of this technology and of everyone who interacts with it, intentionally or unintentionally.