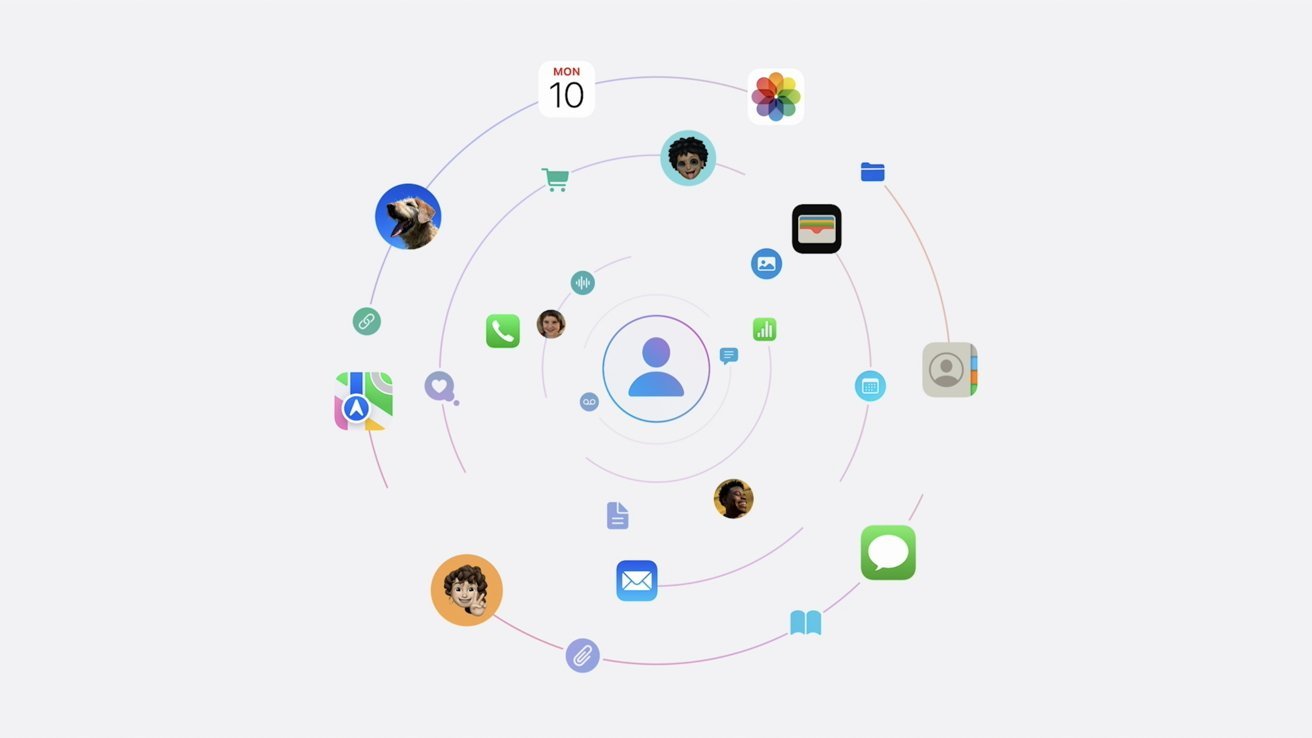

Apple plans to introduce its own version of AI starting with iOS 18.1. Image credit: Apple

A new paper by Apple’s artificial intelligence scientists finds that engines based on large language models, such as those from Meta and OpenAI, still lack basic reasoning skills.

The group proposed a new benchmark, GSM-Symbolic, to help others measure the reasoning capabilities of various large language models (LLMs). Their initial tests revealed that small changes in the wording of a query can produce significantly different answers, undermining the models’ reliability.

The research group investigated the “fragility” of mathematical reasoning by adding contextual information to queries that a human could understand but that should not affect the underlying mathematics of the solution. The models returned varying answers, which should not happen.

“Specifically, the performance of all models declines [even] when only the numerical values in the question are altered in the GSM-Symbolic benchmark,” the group wrote in its report. “Furthermore, the fragility of mathematical reasoning in these models [demonstrates] that their performance significantly deteriorates as the number of clauses in a question increases.”
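The idea of varying only the names and numbers of a question can be sketched in a few lines of Python. This is a minimal illustration, not the benchmark's actual code: the template text, the name list, the numeric ranges, and the helper names `make_variant` and `accuracy` are all assumptions made for the example, and `model` stands for any hypothetical callable that takes a question string and returns an integer answer.

```python
import random

# Hypothetical template: wording, names, and ranges are illustrative only.
TEMPLATE = (
    "{name} picks {fri} kiwis on Friday, {sat} kiwis on Saturday, and twice as "
    "many kiwis on Sunday as Friday. How many kiwis does {name} have?"
)
NAMES = ["Oliver", "Sofia", "Mei", "Tariq"]


def make_variant(rng):
    """Return (question, correct_answer) for one random instantiation."""
    name = rng.choice(NAMES)
    fri = rng.randint(10, 99)
    sat = rng.randint(10, 99)
    question = TEMPLATE.format(name=name, fri=fri, sat=sat)
    # Sunday's haul is twice Friday's, so the total is fri + sat + 2 * fri.
    answer = fri + sat + 2 * fri
    return question, answer


def accuracy(model, n=100, seed=0):
    """Fraction of n generated variants that `model` answers correctly."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(n):
        question, answer = make_variant(rng)
        if model(question) == answer:
            correct += 1
    return correct / n
```

A system that truly reasons about the problem should score the same on every variant, since the underlying arithmetic never changes. A drop in accuracy across variants is the kind of fragility the report describes.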

The study found that adding even a single sentence that appears to offer relevant information to a given math question can reduce the accuracy of the final answer by up to 65 percent. “There is simply no way to build a reliable agent on top of this foundation. Just changing a word or two or adding a bit of extraneous information could give you a different answer,” the study concludes.

A lack of critical thinking

One example that illustrates the issue was a math problem that required genuine understanding of the question. The task the team developed, called GSM-NoOp, resembled the math word problems that elementary school students might encounter.

The query started with the information needed to work out a result: “Oliver picks 44 kiwis on Friday, 58 kiwis on Saturday, and twice as many kiwis on Sunday as Friday.”

The query then adds a clause that seems relevant but has no real bearing on the final answer, noting that of the kiwis picked on Sunday, “5 of them were a little smaller than average.” The question asked was simply, “How many kiwis does Oliver have?”

The note about the size of some of the kiwis picked on Sunday should have no bearing on the total number of kiwis picked. However, OpenAI’s model and Meta’s Llama3-8b both subtracted the five smaller kiwis from the total.
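The arithmetic behind the kiwi question can be checked directly. The short Python sketch below uses only the figures quoted in the problem; the variable names are chosen here for illustration.

```python
friday = 44
saturday = 58
sunday = 2 * friday  # "twice as many kiwis on Sunday as Friday"

# The size of a kiwi does not change how many kiwis there are.
correct_total = friday + saturday + sunday

# The mistaken step: treating the five smaller kiwis as if they were removed.
smaller_than_average = 5
flawed_total = correct_total - smaller_than_average

print(correct_total)  # 190
print(flawed_total)   # 185
```

The correct answer is 190 kiwis. Subtracting the five smaller ones gives 185, which follows from the error described above.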

The finding of flawed logic is supported by a previous study from 2019 that could reliably confuse AI models by asking a question about the ages of two previous Super Bowl quarterbacks. When background and related information was added about the games they played in, along with a third person who was a quarterback in another bowl game, the models produced incorrect answers.

“We found no evidence of formal reasoning in language models,” the new study concludes. The behavior of LLMs is “better explained by sophisticated pattern matching,” which the study found to be so fragile in practice that [simply] changing names can alter the results.